The Great AI Integration Problem: Why MCP Servers Matter More Than Your LLM

- elixion solutions

- Sep 2, 2025

Every enterprise AI conversation starts the same way: "Which LLM should we use?" GPT-4? Claude? Llama? The obsession with model selection has reached fever pitch, with companies spending months evaluating nuanced differences in reasoning capabilities, token costs, and safety measures.

Here's the uncomfortable truth: your choice of LLM is probably the least important decision you'll make in your AI implementation. The real challenge isn't finding the perfect model; it's connecting that model to the messy, complex, legacy-laden systems that actually run your business.

Welcome to the Great AI Integration Problem, where MCP servers might just be the solution nobody's talking about.

The Hidden Iceberg of AI Implementation

The AI industry has sold us a beautiful lie: that intelligence is the bottleneck. Deploy a powerful enough LLM, and watch your productivity soar. In reality, even the most sophisticated AI model is of little use until it can reliably interact with your actual business systems.

Consider a simple use case: an AI assistant that helps customers check order status. Sounds straightforward, right? The AI needs to:

Authenticate the customer

Query the order management system

Cross-reference inventory data

Check shipping provider APIs

Access customer service history

Update interaction logs

Trigger follow-up workflows

Suddenly, your "simple" AI assistant requires integration with half a dozen or more different systems, each with its own API structure, authentication method, rate limits, and failure modes. The LLM might be brilliant at natural language processing, but it's completely helpless when your legacy CRM returns a 500 error.
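To make that fan-out concrete, here is a minimal Python sketch of the backend work behind one order-status question. Every function below (`authenticate`, `get_order`, `get_stock`, `get_shipment`, `get_support_history`, `answer_order_status`) is a hypothetical stub standing in for a real system's API, and follow-up workflows are omitted for brevity. The point is how many independent calls, data shapes, and failure modes a single customer question touches.

```python
from dataclasses import dataclass, field


class BackendError(Exception):
    """Raised by a hypothetical backend, e.g. when a legacy CRM returns HTTP 500."""


# --- Hypothetical stand-ins for separate business systems ---

def authenticate(customer_token: str) -> str:
    # Identity provider: maps a session token to a customer ID.
    if customer_token != "valid-token":
        raise BackendError("identity provider rejected token")
    return "cust-42"


def get_order(customer_id: str, order_id: str) -> dict:
    # Order management system: returns its own record shape.
    return {"order_id": order_id, "sku": "SKU-1", "carrier_ref": "TRK-9"}


def get_stock(sku: str) -> int:
    # Inventory system: a bare integer, a different shape again.
    return 3


def get_shipment(carrier_ref: str) -> str:
    # Shipping provider API.
    return "in transit"


def get_support_history(customer_id: str) -> list:
    # Legacy CRM: simulated outage.
    raise BackendError("CRM returned HTTP 500")


@dataclass
class OrderStatusAnswer:
    status: str
    notes: list = field(default_factory=list)


def answer_order_status(token: str, order_id: str, audit_log: list) -> OrderStatusAnswer:
    customer_id = authenticate(token)
    order = get_order(customer_id, order_id)
    answer = OrderStatusAnswer(status=get_shipment(order["carrier_ref"]))
    if get_stock(order["sku"]) == 0:
        answer.notes.append("item currently out of stock")
    try:
        history = get_support_history(customer_id)
        answer.notes.append(f"{len(history)} previous support contacts")
    except BackendError as exc:
        # One failing system should degrade the answer, not crash the assistant.
        answer.notes.append("support history unavailable")
        audit_log.append(f"degraded: {exc}")
    audit_log.append(f"answered order {order_id} for {customer_id}")  # interaction log
    return answer
```

Calling `answer_order_status("valid-token", "A-100", log)` returns a status of `"in transit"` with the note `"support history unavailable"`, because the simulated CRM outage is caught and logged rather than propagated. Every one of those stubs would be a separate custom connector in a point-to-point design.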

The Custom Integration Trap

Most organisations approach AI integration the same way they've always approached system integration: build custom connections point-to-point. Need the AI to access Salesforce? Build a custom connector. Want it to query your data warehouse? Another custom integration. Email system? Yet another bespoke solution.

This approach creates what we might call "integration debt": a growing web of custom code that becomes increasingly expensive to maintain. Every system upgrade breaks something. Every new AI use case requires building new connectors. Every security requirement needs to be implemented across dozens of integration points.

The total cost of ownership explodes. Companies that expected AI to reduce operational complexity find themselves drowning in maintenance overhead for integration infrastructure that's invisible to end users but critical to operations.

The Standardisation Imperative

The software industry has seen this movie before. In the early days of web development, every application built its own HTTP handling, session management, and routing logic. Then frameworks emerged that standardised these common patterns, allowing developers to focus on business logic rather than infrastructure plumbing.

AI integration is at that same inflection point. We need standardised protocols that handle the common patterns of AI-to-system communication, allowing organisations to focus on AI applications rather than integration engineering.

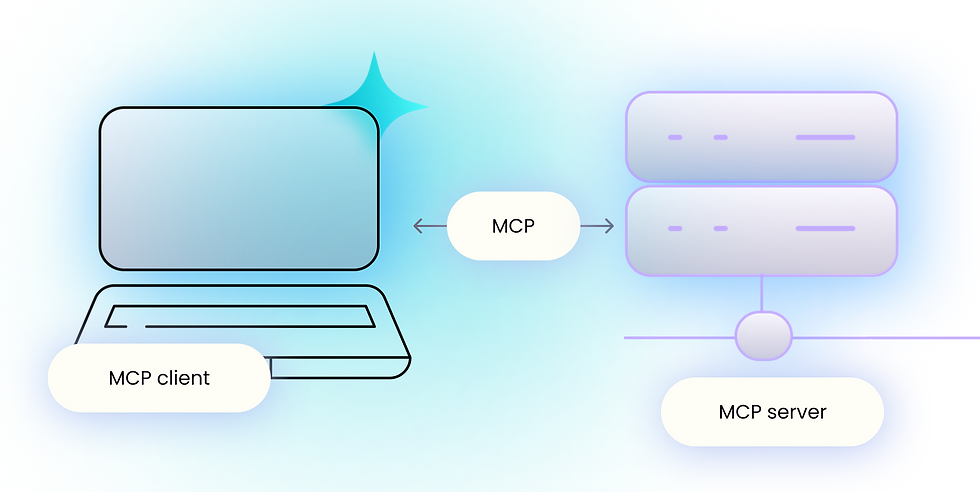

Enter Model Context Protocol (MCP) servers.

MCP Servers: The Missing Infrastructure Layer

MCP servers act as intermediaries between AI applications and business systems. Each server exposes a system's capabilities (tools the model can call, resources it can read, and reusable prompts) through a standardised interface that abstracts away system-specific integration challenges.
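MCP messages are JSON-RPC 2.0 requests: a client discovers a server's tools with `tools/list` and invokes one with `tools/call`. The sketch below is a stdlib-only, in-process illustration of that request and response shape, not the official MCP SDK. It assumes away the transport, the initialisation handshake, and capability negotiation, and the `get_order_status` tool and its `ORDERS` lookup table are hypothetical.

```python
import json

# Hypothetical backend data standing in for an order management system.
ORDERS = {"A-100": "in transit", "A-101": "delivered"}

# Tool metadata in the shape returned by tools/list: name, description, JSON Schema input.
TOOLS = [
    {
        "name": "get_order_status",
        "description": "Look up the shipping status of an order by ID.",
        "inputSchema": {
            "type": "object",
            "properties": {"order_id": {"type": "string"}},
            "required": ["order_id"],
        },
    }
]


def call_tool(name: str, arguments: dict) -> dict:
    """Translate a generic tool call into a (hypothetical) system-specific lookup."""
    if name != "get_order_status":
        raise KeyError(name)
    status = ORDERS.get(arguments.get("order_id", ""))
    if status is None:
        # Tool-level failures are reported inside the result, not as protocol errors.
        return {"content": [{"type": "text", "text": "order not found"}], "isError": True}
    return {"content": [{"type": "text", "text": status}], "isError": False}


def handle(raw: str) -> str:
    """Dispatch one JSON-RPC 2.0 request string and return the response string."""
    req = json.loads(raw)
    resp = {"jsonrpc": "2.0", "id": req.get("id")}
    method = req.get("method")
    if method == "tools/list":
        resp["result"] = {"tools": TOOLS}
    elif method == "tools/call":
        params = req.get("params", {})
        try:
            resp["result"] = call_tool(params["name"], params.get("arguments", {}))
        except KeyError:
            # JSON-RPC "Invalid params": unknown tool or missing tool name.
            resp["error"] = {"code": -32602, "message": "unknown tool or missing name"}
    else:
        # JSON-RPC "Method not found".
        resp["error"] = {"code": -32601, "message": f"method not found: {method}"}
    return json.dumps(resp)
```

The AI application never sees the order system's own API; it only sees a named tool with a JSON Schema, which is what lets the same client code talk to any number of servers.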

Instead of building custom connectors for every AI-system interaction, organisations deploy MCP servers that handle:

Unified Authentication: Single authentication model across all connected systems, with proper credential management and rotation.

Protocol Translation: Converting between AI model requests and system-specific API calls, handling differences in data formats, pagination, and response structures.

Error Handling and Resilience: Consistent error handling, retry logic, and circuit breaker patterns across all integrations.

Rate Limiting and Resource Management: Preventing AI applications from overwhelming backend systems with requests.

Audit and Compliance: Comprehensive logging of all AI-system interactions for security, compliance, and debugging purposes.

Context Management: Maintaining conversation context and system state across complex, multi-step AI workflows.
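Several of the responsibilities above are ordinary resilience patterns that an MCP server can apply uniformly to every backend it wraps; none of them is defined by the MCP specification itself. The following is a minimal sketch, assuming a hypothetical `ResilientBackend` wrapper around any zero-argument callable. The token-bucket rate, retry count, backoff delay, and breaker threshold are illustrative values rather than recommendations, and the clock and sleep functions are injectable so the behaviour can be tested deterministically.

```python
import time
from typing import Callable, List, Optional


class CircuitOpenError(Exception):
    """Raised while the breaker is open and calls are short-circuited."""


class RateLimitedError(Exception):
    """Raised when the token bucket has no capacity left."""


class ResilientBackend:
    """Hypothetical wrapper applying rate limiting, retries, a circuit breaker and audit logging."""

    def __init__(self, call: Callable[[], object], name: str,
                 rate_per_sec: float = 5.0, burst: int = 5,
                 max_retries: int = 2, base_delay: float = 0.1,
                 failure_threshold: int = 3, reset_after: float = 30.0,
                 clock: Callable[[], float] = time.monotonic,
                 sleep: Callable[[float], None] = time.sleep):
        self.call, self.name = call, name
        self.rate, self.capacity, self.tokens = rate_per_sec, burst, float(burst)
        self.max_retries, self.base_delay = max_retries, base_delay
        self.failure_threshold, self.reset_after = failure_threshold, reset_after
        self.clock, self.sleep = clock, sleep
        self.last_refill = clock()
        self.failures = 0                       # consecutive failed invocations
        self.opened_at: Optional[float] = None  # when the breaker last tripped
        self.audit: List[str] = []              # every outcome, for compliance and debugging

    def _take_token(self) -> bool:
        # Token bucket: refill in proportion to elapsed time, capped at the burst size.
        now = self.clock()
        self.tokens = min(self.capacity, self.tokens + (now - self.last_refill) * self.rate)
        self.last_refill = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False

    def invoke(self) -> object:
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_after:
                self.audit.append(f"{self.name}: short-circuited")
                raise CircuitOpenError(self.name)
            # Reset window elapsed: let calls through again; another failure re-trips it.
            self.opened_at = None
        if not self._take_token():
            self.audit.append(f"{self.name}: rate limited")
            raise RateLimitedError(self.name)
        last_error: Exception = RuntimeError("no attempt made")
        for attempt in range(self.max_retries + 1):
            try:
                result = self.call()
            except Exception as exc:  # a real server would retry only transient errors
                last_error = exc
                self.audit.append(f"{self.name}: attempt {attempt + 1} failed: {exc}")
                if attempt < self.max_retries:
                    self.sleep(self.base_delay * 2 ** attempt)  # exponential backoff
                continue
            self.failures = 0
            self.audit.append(f"{self.name}: ok")
            return result
        # Every attempt failed: count it toward tripping the breaker.
        self.failures += 1
        if self.failures >= self.failure_threshold:
            self.opened_at = self.clock()
        raise last_error
```

Because every backend gets the same wrapper, the retry, rate-limiting, and breaker policies live in one place instead of being re-implemented inside each custom connector, and the `audit` list gives a single record of every interaction.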

The Economics of Standardised Integration

The financial case for MCP servers becomes clear when you consider the true cost of AI integration:

Development Costs: Custom integrations require specialised knowledge of both AI systems and target business applications. MCP servers separate the two: system specialists build a server once, and AI teams consume it through a standard interface.

Maintenance Overhead: Every system update or security patch potentially breaks custom integrations. MCP servers centralise this maintenance burden.

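Scaling Complexity: With point-to-point connectors, integration work grows multiplicatively, because every new AI application may need its own connector to every system it touches. With MCP servers, each application and each system is integrated once, so the work grows roughly linearly.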

Security and Compliance: Managing security across dozens of point-to-point integrations is complex and error-prone. MCP servers create a single, auditable control plane.

Time to Market: Custom integrations often take months to develop and test. Reusing existing MCP servers can reduce implementation time from months to weeks.

Consider a mid-sized company implementing AI across five business functions, connecting to 20 different systems. Custom integration might require 50+ separate connectors (up to 100 if every function needs every system), each requiring development, testing, and ongoing maintenance. With MCP, this could shrink to roughly 25 pieces of integration work: five AI applications that speak the protocol, plus 20 systems each exposed once through an MCP server.
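The arithmetic behind that example can be made explicit. Point-to-point work counts one connector per (application, system) pair actually needed, while a shared protocol needs one integration per application plus one server per distinct system. In this minimal Python sketch the `app0`/`sys0` names are placeholders, and the "typical" mix of ten shared systems plus two function-specific ones is invented for illustration, not measured data.

```python
def point_to_point(needs: dict[str, set[str]]) -> int:
    # One bespoke connector per (application, system) pair actually required.
    return sum(len(systems) for systems in needs.values())


def via_shared_protocol(needs: dict[str, set[str]]) -> int:
    # One protocol client per application, plus one server per distinct system.
    distinct_systems = set().union(*needs.values())
    return len(needs) + len(distinct_systems)


# Worst case: five functions, each needing all 20 systems.
all_systems = {f"sys{i}" for i in range(20)}
worst = {f"app{j}": all_systems for j in range(5)}
print(point_to_point(worst), via_shared_protocol(worst))  # 100 25

# An illustrative mix: ten shared systems plus two function-specific ones each.
shared = {f"sys{i}" for i in range(10)}
typical = {f"app{j}": shared | {f"sys{10 + 2 * j}", f"sys{11 + 2 * j}"} for j in range(5)}
print(point_to_point(typical), via_shared_protocol(typical))  # 60 25
```

The gap widens with every application or system added: the first count grows with the product of the two, the second with their sum.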

Real-World Implementation Patterns

Early adopters are discovering several compelling MCP server deployment patterns:

The Integration Hub: A single MCP server handling all AI-system connections for a department or business unit, providing centralised control and monitoring.

The Specialised Gateway: MCP servers optimised for specific system types (CRM, ERP, data warehouse), allowing for deep integration with complex business applications.

The Security Proxy: MCP servers focused primarily on security and compliance, ensuring all AI interactions meet enterprise security standards.

The Development Accelerator: MCP servers that provide mock APIs and test environments, allowing AI application development to proceed independently of system availability.
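The Development Accelerator pattern rests on a simple idea: the AI-facing tool depends on an interface, and either a real system adapter or a mock can sit behind it. A minimal Python sketch using `typing.Protocol`; `OrderSystem`, `MockOrderSystem`, and `summarise_order` are hypothetical names, and a production adapter would call the real order management API instead of reading a dictionary.

```python
from typing import Dict, List, Protocol


class OrderSystem(Protocol):
    """The contract the AI-facing tool depends on, whatever sits behind it."""

    def order_status(self, order_id: str) -> str: ...


class MockOrderSystem:
    """Canned responses so AI development can proceed while the real system is unavailable."""

    def __init__(self, fixtures: Dict[str, str]):
        self.fixtures = fixtures
        self.calls: List[str] = []  # recorded so tests can check what the AI requested

    def order_status(self, order_id: str) -> str:
        self.calls.append(order_id)
        return self.fixtures.get(order_id, "unknown order")


def summarise_order(system: OrderSystem, order_id: str) -> str:
    # The tool logic is identical whether `system` is the mock or a real adapter.
    return f"Order {order_id}: {system.order_status(order_id)}"


mock = MockOrderSystem({"A-100": "in transit"})
print(summarise_order(mock, "A-100"))  # Order A-100: in transit
```

Swapping the mock for a real adapter later changes one constructor call, not the tool logic the AI application relies on.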

The Competitive Advantage Hidden in Plain Sight

Here's what most organisations miss: AI competitive advantage doesn't come from having slightly better models. It comes from deploying AI applications faster, more reliably, and at lower cost than competitors.

Companies that solve the integration challenge first will deploy AI solutions at dramatically higher velocity. While competitors are still building custom connectors for their third AI use case, MCP-enabled organisations are deploying their twentieth.

This isn't about technology for technology's sake. It's about business agility in an AI-powered world.

The Path Forward

The shift toward MCP servers represents a maturation of the AI ecosystem. Just as web frameworks allowed developers to stop reinventing HTTP handling, MCP servers will allow organisations to stop reinventing AI integration.

The question isn't whether this standardisation will happen. The question is whether your organisation will be an early adopter that gains competitive advantage, or a late follower that struggles to catch up.

The companies that recognise integration as the real AI challenge, and invest in solving it systematically rather than repeatedly, will find themselves with a sustainable competitive advantage that has nothing to do with which LLM they choose.

Your LLM will be obsolete in 18 months. Your integration architecture will determine your AI success for the next decade.

Making the Shift

For organisations ready to move beyond the LLM obsession, the path forward involves:

Assessment: Audit existing and planned AI use cases to understand integration requirements.

Architecture: Design MCP server deployment patterns that match your organisational structure and security requirements.

Implementation: Start with high-value, low-complexity use cases to build internal expertise.

Scaling: Expand MCP coverage systematically, prioritising integrations that enable multiple AI applications.

Optimisation: Continuously monitor and optimise MCP performance, security, and reliability.
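The Assessment and Scaling steps can start as a simple inventory. The sketch below assumes a hypothetical mapping from planned AI use cases to the systems each one touches, and ranks systems by how many use cases they would unlock, which is one way to act on prioritising integrations that enable multiple AI applications. The use-case and system names are illustrative only.

```python
from collections import Counter


def prioritise_systems(use_cases: dict[str, set[str]]) -> list[tuple[str, int]]:
    """Rank systems by the number of use cases that need them (ties broken by name)."""
    counts = Counter(system for systems in use_cases.values() for system in systems)
    return sorted(counts.items(), key=lambda item: (-item[1], item[0]))


# Hypothetical inventory of planned use cases and the systems each one needs.
planned = {
    "order status assistant": {"crm", "order_management", "shipping"},
    "returns triage": {"crm", "order_management", "warehouse"},
    "sales call prep": {"crm", "data_warehouse"},
}
for system, n in prioritise_systems(planned):
    print(f"{system}: needed by {n} use case(s)")
```

Here the CRM comes out first because all three use cases need it, so wrapping it in an MCP server early pays off three times over.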

Conclusion

The Great AI Integration Problem won't be solved by better models or more sophisticated prompting techniques. It requires treating integration as a first-class architectural concern, worthy of the same attention and investment that organisations give to their AI models.

MCP servers represent the emergence of enterprise-grade AI infrastructure. They're not the flashy, headline-grabbing part of AI implementation, but they might just be the most important.

The future belongs to organisations that understand this distinction and act accordingly.
